Artificial Intelligence (AI): An Overview

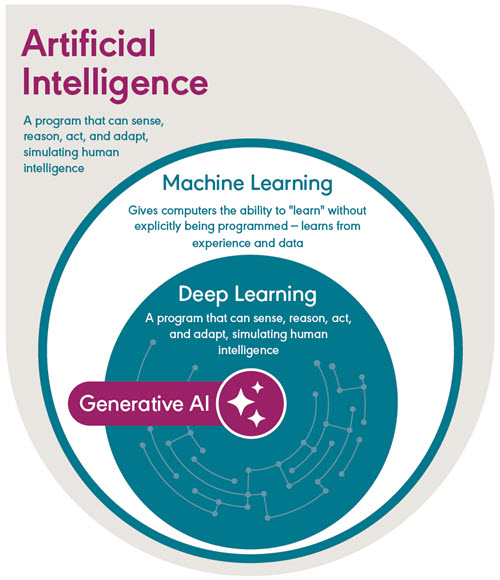

Artificial intelligence (AI) is the umbrella term for technologies that let machines mimic human behaviors like learning, problem solving, and completing tasks that we used to think only humans could do. Under that umbrella, there are multiple types of algorithms, including:

- Machine learning (ML) is where computers “learn” patterns from data instead of relying on step-by-step programming. You’ll already see ML in some assessment tools and commercial platforms that adapt as they collect more data. Examples include streaming app recommendations, facial recognition, and targeted ads you might see on social media.

- Deep learning is a subset of ML, powered by layered neural networks; it’s the engine under the hood of most generative AI systems. Voice assistants, navigation apps, and tools that provide digital translation and transcription use deep learning.

Generative AI models use statistical patterning from massive reference datasets to generate text, images, audio, and many other new outputs. These outputs are not lookups—or a return of existing information—but new content based on the patterns and structure of their training datasets.

Source: Start Small, Dream Big: Getting Started With Generative AI (Reid, 2025).

A large language model (LLM) is a generative AI tool (specifically, a deep learning model) that is trained on massive amounts of text data to understand, generate, and manipulate human language.

An LLM can perform a variety of tasks, including:

- generating text

- translating text

- summarizing complex content

Many audiologists and speech-language pathologists (SLPs) who work in the United States are piloting and using LLMs to support a variety of clerical and, sometimes, clinical tasks.

Generative AI tools are just that—tools. Generative AI cannot replace your clinical judgment or human connection.

How AI Is Trained

Generative AI tools, such as LLMs, create responses by predicting text based on the information or data from their “training.” Training in this context means feeding large amounts of information or data into a generative AI program so that it learns the patterns it later uses to generate answers for the user. These data often include publicly available content from websites and user interactions, which can affect the accuracy and relevance of the response. The datasets that commercial LLMs use are not specifically curated for the Communication Sciences and Disorders (CSD) field. This lack of curation is particularly relevant for new codes, legislation, or evolving standards in health care delivery.
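To make "predicting text from training data" concrete, here is a minimal sketch of a toy word-pair (bigram) predictor. It is an illustration only and far simpler than a real LLM, which uses a deep neural network rather than simple counts. The training sentences and the function names `train_bigram_model` and `predict_next` are hypothetical, chosen for this example.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count, for each word, which words follow it in the training text."""
    following = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            following[current_word][next_word] += 1
    return following


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text (an assumption for illustration only).
corpus = [
    "the clinician reviews the report",
    "the clinician reviews the chart",
    "the clinician reviews the report carefully",
]

model = train_bigram_model(corpus)
print(predict_next(model, "clinician"))  # reviews
print(predict_next(model, "audiogram"))  # None: the word never appeared in training
```

The second call shows, in miniature, why gaps in training data matter: the toy model has nothing to say about a word it never saw. A real LLM would usually still produce an answer in that situation, which is one reason its output needs review.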

Clinicians should be aware of two primary limitations.

Accuracy and Relevance

- Fabricated information: Generative AI models may generate “hallucinations,” which are plausible-sounding statements, images, or other content that contain false information or disinformation.

- Inaccurate information: The information used to generate a response may include misinformation, disinformation, or factually inaccurate content.

- Domain gaps: Rare disorders or specialized clinical protocols may be underrepresented in training data, leading to superficial or generic outputs that do not consider the individual client, patient, or student.

- Outdated information: Early LLMs were trained on data that may now be outdated. LLMs do not “forget” old information, so they may generate misleading content that leaves out updated guidelines, policies, and innovations.

Representation in Training Data

- Under-representation of marginalized populations: Training sets may not reflect the full range of dialects, languages, needs, and cultural contexts encountered in practice.

- Potential for biased language: Without careful review, outputs may perpetuate stereotypes or insensitive phrasing.

Strategies for enhancing the reliability of generative AI responses include using tools that

- Allow the user to provide credible and relevant sources for the LLM to use to generate a response

- Facilitate retrieval-augmented generation (RAG), where the generative AI tool can reference a trusted, verified source of information to inform responses

- Perform web searches and provide publicly available web links to the material referenced in generated responses, including material from ASHA or the American Medical Association
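The retrieval-augmented generation strategy above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not how any particular product works: it ranks passages by shared words, whereas real RAG tools typically use more sophisticated search, and it stops at building the prompt rather than calling an LLM. The source titles, the placeholder passage text, and the function names `retrieve` and `build_prompt` are all hypothetical.

```python
import re


def tokenize(text):
    """Split text into a set of lowercase words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, sources, top_k=1):
    """Rank trusted passages by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        sources,
        key=lambda source: len(question_words & tokenize(source["text"])),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, passages):
    """Combine the retrieved passages and the question into one prompt."""
    context = "\n".join(f"[{p['title']}] {p['text']}" for p in passages)
    return (
        "Answer using only the sources below and cite the source title.\n"
        f"Sources:\n{context}\n"
        f"Question: {question}"
    )


# Hypothetical placeholder passages standing in for a trusted, verified source.
trusted_sources = [
    {"title": "Billing guide",
     "text": "Placeholder text about billing codes for hearing evaluation."},
    {"title": "Telepractice policy",
     "text": "Placeholder text about telepractice service delivery rules."},
]

question = "Which billing codes apply to a hearing evaluation?"
passages = retrieve(question, trusted_sources)
prompt = build_prompt(question, passages)
print(prompt)
```

The design point is that the model is asked to answer from the supplied passages rather than from its general training data, which makes the response easier to trace back to a source and check.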